
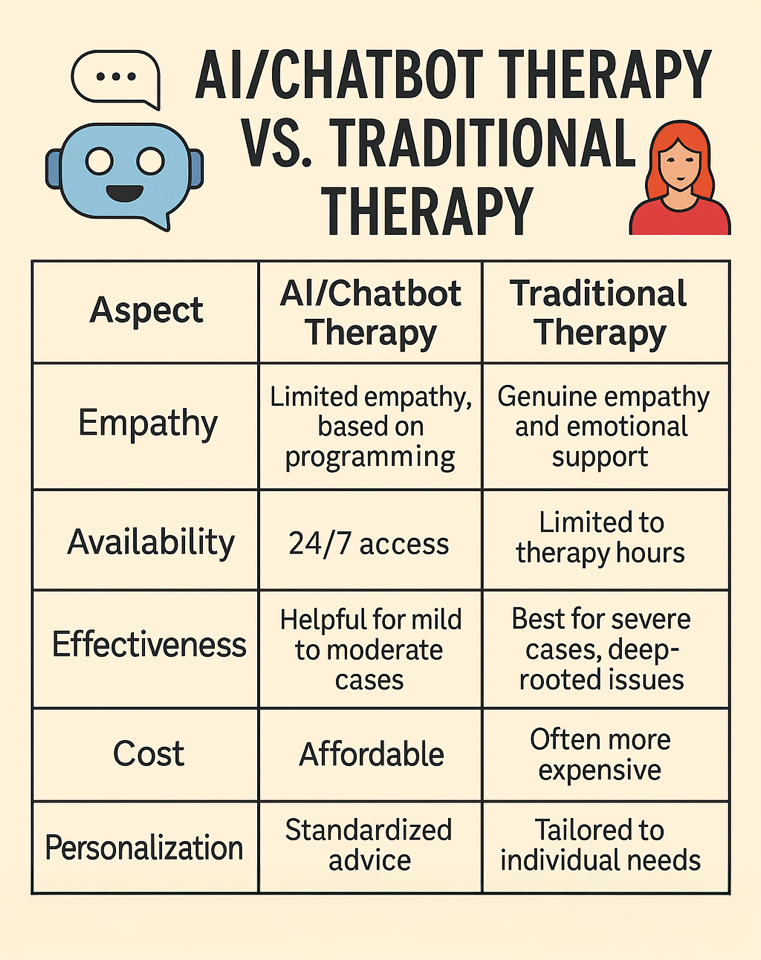

## The Rise of AI in Mental Health Care

In recent years, artificial intelligence (AI) and chatbots have moved into the spotlight as potential tools for mental health care, particularly in treating disorders like depression and anxiety. As people seek more accessible and affordable treatment options, the idea of AI-driven therapy is understandably appealing. However, as a mental health nurse practitioner, I keep coming back to one question: should people rely on these digital solutions to treat severe mental health disorders? Before you hand your emotions to an algorithm, let’s weigh the promise against the pitfalls.

## The Role of AI and Chatbots in Therapy

Artificial intelligence and chatbots have come a long way in their ability to mimic human conversation and therapeutic techniques. Some of the best-known AI-driven platforms, such as Woebot and Wysa, have gained popularity by letting users discuss their feelings and receive guidance based on cognitive-behavioral therapy (CBT). CBT is grounded in the idea that thoughts, feelings, and actions are interconnected. Negative thoughts can make you feel worse, but CBT teaches you to challenge those thoughts, take small positive actions, and build healthier habits. It’s a practical, present-focused approach that gives you tools to manage stress, confront fears, and feel better day by day.

These platforms use algorithms to analyze what users write, estimate their emotional state, and generate responses designed to help them cope with mental health challenges. However, many mental health experts question whether these systems can truly replace human interaction and professional mental health services.

## Benefits of AI Therapy and Chatbots

Some potential benefits are:

- 24/7 Availability: AI systems are available at any hour and are designed to offer consistent guidance and support, delivering the same level of reliable assistance with every interaction.
While these systems cannot fully replace the depth of human therapy or crisis intervention, they can serve as a dependable companion, helping users feel supported whenever they need it most.
- Anonymity and Comfort
- Cost-Effectiveness
- Scalability

## Limitations of AI Therapy and Chatbots

Perhaps the greatest limitation of AI in mental health is its inability to replicate human empathy. While chatbots can simulate understanding through carefully programmed responses, they cannot truly listen, validate, or connect on a deep emotional level. Therapy is not simply about problem-solving; it is about creating a safe, trusting relationship in which patients feel seen and understood. Research consistently shows that the therapeutic alliance, the bond between therapist and patient, is one of the strongest predictors of positive treatment outcomes. This is a dimension of care that no algorithm, however advanced, can fully reproduce.

Equally important, AI tools may help with mild to moderate symptoms of depression or anxiety, but they are not designed to handle severe or complex cases. When deeper emotional trauma, suicidal ideation, or multi-layered psychological challenges are involved, the nuanced judgment and personalized care of a trained human therapist become indispensable. AI may guide users through breathing exercises or mood tracking, but it cannot navigate the intricate dynamics of trauma, abuse, or long-standing relational issues. Some studies suggest that over-reliance on automated systems could even delay critical human intervention. AI-driven platforms may also misread or fail to recognize the complexity of a person’s mental health issues, leading to ineffective or even harmful advice.

Another critical weakness of chatbot-driven mental health care is the lack of professional oversight and accountability.
Unlike licensed therapists, who are bound by ethical codes and regulated by state boards, AI platforms operate in a gray area with no standardized safeguards. This issue came into sharp focus after a high-profile lawsuit involving an AI chatbot allegedly linked to a teenager’s suicide, a tragic reminder that mental health support cannot be left unregulated. It also raises troubling questions about liability: who is responsible if an AI gives harmful or misleading guidance? Therapy demands both professional expertise and emotional attunement. AI can simulate therapeutic techniques, but it cannot replace the responsibility, nuance, and care that only a trained human therapist can provide.

## When Should You Use AI and Chatbots for Therapy?

While AI and chatbots offer certain advantages, they should not be viewed as a substitute for professional mental health care. They are better understood as supplementary tools that complement traditional therapy. For example, some people undergoing treatment for depression have used chatbot platforms to practice coping strategies or reinforce techniques such as thought reframing between sessions.

AI platforms have also been explored as a way to help manage mild symptoms of stress, anxiety, or low mood. For instance, users have reported finding value in features like guided breathing exercises or mindfulness prompts that support day-to-day well-being.

In some cases, people have turned to chatbots for immediate interaction when experiencing distress. These tools may offer some comfort through calming exercises or supportive dialogue, but urgent or severe emotional crises are best handled by reaching out to a human-staffed crisis hotline or a licensed professional.

## Is AI the Future of Mental Health Therapy?

AI and chatbots can provide helpful support for people managing mild to moderate symptoms of depression or anxiety, offering convenience, affordability, and anonymity.
Still, they cannot match the empathy, depth, and personalized care that human therapists provide. For those experiencing more serious mental health challenges, AI should be viewed as a supplement to professional treatment, not a substitute for it. Licensed therapists remain essential for addressing root causes, while AI tools work best as supportive add-ons. Over-relying on automated platforms risks leaving critical needs unmet. Professional oversight and timely intervention remain key to lasting progress, a reminder that AI is only one piece of a larger care framework, not a standalone solution.